# Copyright (c) Meta Platforms, Inc. and affiliates. All rights reserved.

This tutorial demonstrates the `cameras`, `transforms`, and `so3` APIs.

The problem we deal with is defined as follows:

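Given an optical system of $N$ cameras with extrinsics $\{g_1, ..., g_N | g_i \in SE(3)\}$, and a set of relative camera positions $\{g_{ij} | g_{ij}\in SE(3)\}$ that map between the coordinate frames of randomly selected pairs of cameras $(i, j)$, we search for the absolute extrinsic parameters $\{g_1, ..., g_N\}$ that are consistent with the relative camera motions.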

More formally: $$ g_1, ..., g_N = {\arg \min}_{g_1, ..., g_N} \sum_{g_{ij}} d(g_{ij}, g_i^{-1} g_j), $$ where $d(g_i, g_j)$ is a suitable metric that compares the extrinsics of cameras $g_i$ and $g_j$.

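Visually, the problem can be described as follows. The picture below depicts the situation at the beginning of the optimization. The ground truth cameras are plotted in purple, while the randomly initialized estimated cameras are plotted in orange: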

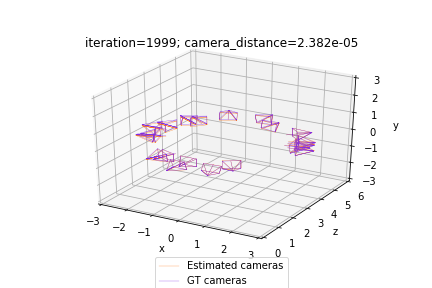

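The optimization aligns the estimated (orange) cameras with the ground truth (purple) cameras by minimizing the discrepancies between pairs of relative cameras. Thus, the solution to the problem should look as follows: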

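In practice, the camera extrinsics $g_{ij}$ and $g_i$ are represented as objects of the `SfMPerspectiveCameras` class, initialized with the corresponding rotation and translation matrices `R_absolute` and `T_absolute` that define the extrinsic parameters $g = (R, T); R \in SO(3); T \in \mathbb{R}^3$. To ensure that `R_absolute` is a valid rotation matrix, we represent it as the exponential map (implemented with `so3_exp_map`) of the axis-angle representation of the rotation, `log_R_absolute`.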
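To see why the exponential map always yields a valid rotation, here is a minimal NumPy sketch of Rodrigues' formula, which maps an axis-angle vector $\omega$ with angle $\theta = \|\omega\|$ to $R = I + \frac{\sin\theta}{\theta}[\omega]_\times + \frac{1-\cos\theta}{\theta^2}[\omega]_\times^2$. The function name `exp_map_so3` is hypothetical; this is an illustrative re-implementation, not PyTorch3D's `so3_exp_map`, which operates on torch tensors and treats small angles in its own way.

```python
import numpy as np


def exp_map_so3(log_rot):
    """Map a batch of axis-angle vectors (B, 3) to rotation matrices (B, 3, 3).

    Rodrigues' formula: R = I + sin(t)/t * K + (1 - cos(t))/t^2 * K @ K,
    where t = |w| and K is the skew-symmetric "hat" matrix of w.
    """
    log_rot = np.asarray(log_rot, dtype=np.float64)
    theta = np.linalg.norm(log_rot, axis=1)  # rotation angles, shape (B,)
    wx, wy, wz = log_rot[:, 0], log_rot[:, 1], log_rot[:, 2]
    zeros = np.zeros_like(wx)
    K = np.stack([
        np.stack([zeros, -wz, wy], axis=1),
        np.stack([wz, zeros, -wx], axis=1),
        np.stack([-wy, wx, zeros], axis=1),
    ], axis=1)  # hat(w), shape (B, 3, 3)

    # Guard the division for (near-)zero angles using the Taylor limits 1 and 1/2.
    small = theta < 1e-8
    safe = np.where(small, 1.0, theta)
    a = np.where(small, 1.0, np.sin(safe) / safe)
    b = np.where(small, 0.5, (1.0 - np.cos(safe)) / safe**2)
    eye = np.eye(3)[None]
    return eye + a[:, None, None] * K + b[:, None, None] * (K @ K)


rng = np.random.default_rng(0)
R = exp_map_so3(rng.normal(size=(4, 3)))
# Every output is orthonormal with determinant +1, i.e. a member of SO(3).
print(np.allclose(R @ R.transpose(0, 2, 1), np.eye(3)), np.allclose(np.linalg.det(R), 1.0))  # → True True
```

Because a zero axis-angle vector maps to the identity, setting the first row of `log_R_absolute` to zero gives the first camera a trivial rotation.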

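Note that the solution to this problem can only be recovered up to an unknown global rigid transformation $g_{glob} \in SE(3)$. Thus, for simplicity, we assume knowledge of the absolute extrinsics of the first camera $g_0$, which we set to the trivial camera $g_0 = (I, \vec{0})$.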
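The global ambiguity can be checked numerically: if every absolute pose is left-multiplied by the same $g_{glob}$, each relative pose is unchanged, because $(g_{glob} g_i)^{-1}(g_{glob} g_j) = g_i^{-1} g_{glob}^{-1} g_{glob} g_j = g_i^{-1} g_j$. The sketch below assumes 4x4 homogeneous matrices in the column-vector convention and uses the squared Frobenius norm as a stand-in for $d$; the helpers `random_se3` and `relative_residual` are hypothetical.

```python
import numpy as np


def random_se3(rng):
    """Hypothetical helper: random 4x4 rigid transform [[R, t], [0, 1]]."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # scale columns so the factorization is unique
    if np.linalg.det(q) < 0:     # flip one column to land in SO(3)
        q[:, 0] = -q[:, 0]
    g = np.eye(4)
    g[:3, :3] = q
    g[:3, 3] = rng.normal(size=3)
    return g


def relative_residual(abs_poses, rel_poses, edges):
    """Sum over edges (i, j) of ||g_ij - g_i^{-1} g_j||_F^2."""
    return sum(
        np.sum((g_ij - np.linalg.inv(abs_poses[i]) @ abs_poses[j]) ** 2)
        for (i, j), g_ij in zip(edges, rel_poses)
    )


rng = np.random.default_rng(0)
edges = [(0, 1), (1, 2), (2, 3), (0, 3)]
gt = [random_se3(rng) for _ in range(4)]
rel = [np.linalg.inv(gt[i]) @ gt[j] for i, j in edges]

# Move every absolute pose by the same unknown global transform.
g_glob = random_se3(rng)
moved = [g_glob @ g for g in gt]
print(relative_residual(gt, rel, edges), relative_residual(moved, rel, edges))

# Pinning the first pose to the identity removes the ambiguity.
fixed = [np.linalg.inv(moved[0]) @ g for g in moved]
print(np.allclose(fixed[0], np.eye(4)))
```

Both residuals are zero up to floating-point error, even though `moved` differs from `gt`. After pinning, `fixed[k]` equals $g_0^{-1} g_k$ for the ground truth, which is exactly the ground truth when $g_0 = (I, \vec{0})$.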

Ensure `torch` and `torchvision` are installed. If `pytorch3d` is not installed, install it using the following cell:

```python
import os
import sys
import torch
need_pytorch3d=False
try:
    import pytorch3d
except ModuleNotFoundError:
    need_pytorch3d=True
if need_pytorch3d:
    if torch.__version__.startswith("2.2.") and sys.platform.startswith("linux"):
        # We try to install PyTorch3D via a released wheel.
        pyt_version_str=torch.__version__.split("+")[0].replace(".", "")
        version_str="".join([
            f"py3{sys.version_info.minor}_cu",
            torch.version.cuda.replace(".",""),
            f"_pyt{pyt_version_str}"
        ])
        !pip install fvcore iopath
        !pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{version_str}/download.html
    else:
        # We try to install PyTorch3D from source.
        !pip install 'git+https://github.com/facebookresearch/pytorch3d.git@stable'
```
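The wheel URL in the install cell is built from the Python minor version, the CUDA version, and the PyTorch version. The sketch below factors that string construction into a hypothetical pure function, `pytorch3d_wheel_tag`, so it can be checked without installing anything. The example inputs are assumptions, and a wheel is not guaranteed to exist for every combination. On a CPU-only PyTorch build `torch.version.cuda` is `None`, so the expression `torch.version.cuda.replace(...)` would raise an `AttributeError` there.

```python
def pytorch3d_wheel_tag(torch_version: str, python_minor: int, cuda_version: str) -> str:
    """Rebuild the wheel version string used by the install cell.

    torch_version: e.g. the value of torch.__version__ (a "+cuXYZ" suffix is dropped)
    python_minor:  e.g. sys.version_info.minor
    cuda_version:  e.g. torch.version.cuda
    """
    pyt_version_str = torch_version.split("+")[0].replace(".", "")
    return "".join([
        f"py3{python_minor}_cu",
        cuda_version.replace(".", ""),
        f"_pyt{pyt_version_str}",
    ])


tag = pytorch3d_wheel_tag("2.2.0+cu121", 10, "12.1")
print(tag)  # → py310_cu121_pyt220
print(f"https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/{tag}/download.html")
```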

```python
# imports
import torch
from pytorch3d.transforms.so3 import (
    so3_exp_map,
    so3_relative_angle,
)
from pytorch3d.renderer.cameras import (
    SfMPerspectiveCameras,
)

# add path for demo utils
import sys
import os
sys.path.append(os.path.abspath(''))

# set for reproducibility
torch.manual_seed(42)
if torch.cuda.is_available():
    device = torch.device("cuda:0")
else:
    device = torch.device("cpu")
    print("WARNING: CPU only, this will be slow!")
```

If using **Google Colab**, fetch the utils file for plotting the camera scene, and the ground truth camera positions:

```python
!wget https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/utils/camera_visualization.py
from camera_visualization import plot_camera_scene

!mkdir data
!wget -P data https://raw.githubusercontent.com/facebookresearch/pytorch3d/main/docs/tutorials/data/camera_graph.pth
```

OR, if running **locally**, uncomment and run the following cell:

```python
# from utils import plot_camera_scene
```

```python
# load the SE3 graph of relative/absolute camera positions
camera_graph_file = './data/camera_graph.pth'
(R_absolute_gt, T_absolute_gt), \
    (R_relative, T_relative), \
    relative_edges = \
    torch.load(camera_graph_file)

# create the relative cameras
cameras_relative = SfMPerspectiveCameras(
    R = R_relative.to(device),
    T = T_relative.to(device),
    device = device,
)

# create the absolute ground truth cameras
cameras_absolute_gt = SfMPerspectiveCameras(
    R = R_absolute_gt.to(device),
    T = T_absolute_gt.to(device),
    device = device,
)

# the number of absolute camera positions
N = R_absolute_gt.shape[0]
```

```python
def calc_camera_distance(cam_1, cam_2):
    """
    Calculates the divergence of a batch of pairs of cameras cam_1, cam_2.
    The distance is composed of 1 minus the cosine of the relative angle
    between the rotation components of the camera extrinsics, and the
    squared l2 distance between the translation vectors.
    """
    # rotation distance
    R_distance = (1.-so3_relative_angle(cam_1.R, cam_2.R, cos_angle=True)).mean()
    # translation distance
    T_distance = ((cam_1.T - cam_2.T)**2).sum(1).mean()
    # the final distance is the sum
    return R_distance + T_distance
```
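The distance is simple enough to check by hand. Below is a NumPy sketch, assuming batched rotations of shape (B, 3, 3) and translations of shape (B, 3), that uses the identity $\cos\theta = (\operatorname{tr}(R_1^\top R_2) - 1)/2$ for the relative angle $\theta$. `camera_distance_np` is a hypothetical name, and unlike PyTorch3D's `so3_relative_angle` it does no input validation.

```python
import numpy as np


def camera_distance_np(R1, T1, R2, T2):
    """NumPy sketch of calc_camera_distance for batches R (B, 3, 3), T (B, 3)."""
    # trace(R1^T R2) equals the elementwise sum of R1 * R2.
    cos_angle = (np.einsum("bij,bij->b", R1, R2) - 1.0) / 2.0
    R_distance = (1.0 - cos_angle).mean()             # 0 for equal rotations, up to 2
    T_distance = ((T1 - T2) ** 2).sum(axis=1).mean()  # squared l2, averaged over the batch
    return R_distance + T_distance


# Worked example: a 90-degree rotation about z has cos(theta) = 0, so the rotation
# term is 1; a translation offset of (3, 4, 0) adds 3^2 + 4^2 = 25.
Rz = np.array([[[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]])
I = np.eye(3)[None]
print(camera_distance_np(I, np.zeros((1, 3)), Rz, np.array([[3.0, 4.0, 0.0]])))  # → 26.0
```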

```python
def get_relative_camera(cams, edges):
    """
    For each pair of indices (i,j) in "edges" generate a camera
    that maps from the coordinates of the camera cams[i] to
    the coordinates of the camera cams[j]
    """

    # first generate the world-to-view Transform3d objects of each
    # camera pair (i, j) according to the edges argument
    trans_i, trans_j = [
        SfMPerspectiveCameras(
            R = cams.R[edges[:, i]],
            T = cams.T[edges[:, i]],
            device = device,
        ).get_world_to_view_transform()
        for i in (0, 1)
    ]

    # compose the relative transformation as g_i^{-1} g_j
    trans_rel = trans_i.inverse().compose(trans_j)

    # generate a camera from the relative transform
    matrix_rel = trans_rel.get_matrix()
    cams_relative = SfMPerspectiveCameras(
        R = matrix_rel[:, :3, :3],
        T = matrix_rel[:, 3, :3],
        device = device,
    )
    return cams_relative
```
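Why does `get_relative_camera` read the translation from row 3 (`matrix_rel[:, 3, :3]`) rather than column 3? PyTorch3D's `Transform3d` uses a row-vector convention: a point is a row vector transformed as $x M$, and a world-to-view transform stores $R$ in the top-left block and $T$ in the last row, so $x_{view} = x_{world} R + T$. Composing "undo camera $i$, then apply camera $j$" gives $M_i^{-1} M_j$, whose blocks are $R_{ij} = R_i^\top R_j$ and $T_{ij} = T_j - T_i R_i^\top R_j$. The NumPy sketch below checks this algebra; `world_to_view_matrix` and `relative_R_T` are hypothetical helpers, not PyTorch3D functions.

```python
import numpy as np


def world_to_view_matrix(R, T):
    """Hypothetical helper: 4x4 row-vector transform with x_view = x_world @ R + T."""
    M = np.eye(4)
    M[:3, :3] = R
    M[3, :3] = T  # translation lives in the last row
    return M


def relative_R_T(R_i, T_i, R_j, T_j):
    """Relative extrinsics from the row-vector composition inv(M_i) @ M_j."""
    M_rel = np.linalg.inv(world_to_view_matrix(R_i, T_i)) @ world_to_view_matrix(R_j, T_j)
    # Same slicing as matrix_rel[:, :3, :3] and matrix_rel[:, 3, :3] in the tutorial.
    return M_rel[:3, :3], M_rel[3, :3]


# A point seen in camera i's view frame is mapped into camera j's view frame.
c, s = np.cos(0.3), np.sin(0.3)
R_i, T_i = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]), np.array([1.0, 2.0, 3.0])
R_j, T_j = np.eye(3), np.array([0.0, -1.0, 0.5])
R_rel, T_rel = relative_R_T(R_i, T_i, R_j, T_j)
x_world = np.array([0.5, -0.2, 4.0])
print(np.allclose((x_world @ R_i + T_i) @ R_rel + T_rel, x_world @ R_j + T_j))  # → True
```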

Finally, we start the optimization of the absolute cameras.

We use SGD with momentum and optimize over `log_R_absolute` and `T_absolute`.

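As mentioned earlier, `log_R_absolute` is the axis-angle representation of the rotation part of our absolute cameras. We can obtain the 3x3 rotation matrix `R_absolute` that corresponds to `log_R_absolute` with:

```python
R_absolute = so3_exp_map(log_R_absolute)
```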

```python
# initialize the absolute log-rotations/translations with random entries
log_R_absolute_init = torch.randn(N, 3, dtype=torch.float32, device=device)
T_absolute_init = torch.randn(N, 3, dtype=torch.float32, device=device)

# furthermore, we know that the first camera is a trivial one
# (see the description above)
log_R_absolute_init[0, :] = 0.
T_absolute_init[0, :] = 0.

# instantiate a copy of the initialization of log_R / T
log_R_absolute = log_R_absolute_init.clone().detach()
log_R_absolute.requires_grad = True
T_absolute = T_absolute_init.clone().detach()
T_absolute.requires_grad = True

# the mask that specifies which cameras are going to be optimized
# (since we know the first camera is already correct,
# we only optimize over the second through the last cameras)
camera_mask = torch.ones(N, 1, dtype=torch.float32, device=device)
camera_mask[0] = 0.

# init the optimizer
optimizer = torch.optim.SGD([log_R_absolute, T_absolute], lr=.1, momentum=0.9)
```
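The `camera_mask` trick relies on how gradients flow through `log_R_absolute * camera_mask`: row 0 receives an exactly zero gradient, so with momentum SGD its momentum buffer stays zero and the parameter never leaves its initialization. The NumPy sketch below assumes PyTorch's documented heavy-ball update for `torch.optim.SGD` with `dampening=0`, `nesterov=False`, and no weight decay (the buffer is set to the gradient on the first step, then $b \leftarrow \mu b + g$, followed by $p \leftarrow p - \text{lr}\, b$). It applies that update to a hypothetical quadratic objective instead of the camera loss; `sgd_momentum_step` is a hypothetical name.

```python
import numpy as np


def sgd_momentum_step(param, grad, buf, lr=0.1, momentum=0.9):
    """One heavy-ball SGD step (dampening=0, nesterov=False, no weight decay)."""
    buf = grad.copy() if buf is None else momentum * buf + grad
    return param - lr * buf, buf


# Toy objective: sum((param * mask - target)^2), masking row 0 like camera_mask.
rng = np.random.default_rng(0)
N = 5
target = rng.normal(size=(N, 3))
mask = np.ones((N, 1))
mask[0] = 0.0
param = rng.normal(size=(N, 3))
param[0] = 0.0  # the "known" first camera
buf = None
for _ in range(1000):
    grad = 2.0 * (param * mask - target) * mask  # chain rule multiplies by the mask
    param, buf = sgd_momentum_step(param, grad, buf)

print(param[0])                             # row 0 is untouched
print(np.allclose(param[1:], target[1:]))  # → True
```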

```python
# run the optimization
n_iter = 2000  # fix the number of iterations
for it in range(n_iter):
    # re-init the optimizer gradients
    optimizer.zero_grad()

    # compute the absolute camera rotations as
    # an exponential map of the logarithms (=axis-angles)
    # of the absolute rotations
    R_absolute = so3_exp_map(log_R_absolute * camera_mask)

    # get the current absolute cameras
    cameras_absolute = SfMPerspectiveCameras(
        R = R_absolute,
        T = T_absolute * camera_mask,
        device = device,
    )

    # compute the relative cameras as a composition of the absolute cameras
    cameras_relative_composed = \
        get_relative_camera(cameras_absolute, relative_edges)

    # compare the composed cameras with the ground truth relative cameras
    # camera_distance corresponds to $d$ from the description
    camera_distance = \
        calc_camera_distance(cameras_relative_composed, cameras_relative)

    # our loss function is the camera_distance
    camera_distance.backward()

    # apply the gradients
    optimizer.step()

    # plot and print status message
    if it % 200==0 or it==n_iter-1:
        status = 'iteration=%3d; camera_distance=%1.3e' % (it, camera_distance)
        plot_camera_scene(cameras_absolute, cameras_absolute_gt, status)

print('Optimization finished.')
```
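Stripped of rotations, the same ingredients (relative measurements, an objective of the form $\sum d(g_{ij}, g_i^{-1} g_j)$, and a mask that pins the first camera) fit in a few lines. The toy below is a simplification swapped in for illustration, not the tutorial's method: all rotations are assumed to be the identity, so the relative translation of $g_i^{-1} g_j$ reduces to $t_j - t_i$; $d$ is the squared L2 distance; and plain gradient descent replaces momentum SGD. `synchronize_translations` is a hypothetical name.

```python
import numpy as np


def synchronize_translations(rel, edges, n, lr=0.05, n_iter=2000):
    """Toy solver: recover absolute translations t (n, 3) from relative
    measurements rel[e] ~ t[j] - t[i] for edges e = (i, j), with t[0] pinned to 0.

    Minimizes sum_e ||t[j] - t[i] - rel[e]||^2 by gradient descent.
    """
    edges = np.asarray(edges)
    i, j = edges[:, 0], edges[:, 1]
    mask = np.ones((n, 1))
    mask[0] = 0.0  # keep camera 0 at the origin, like camera_mask
    t = np.zeros((n, 3))
    for _ in range(n_iter):
        resid = t[j] - t[i] - rel  # (E, 3) residual per edge
        grad = np.zeros_like(t)
        np.add.at(grad, j, 2.0 * resid)   # d/dt[j] of ||resid||^2
        np.add.at(grad, i, -2.0 * resid)  # d/dt[i] of ||resid||^2
        t -= lr * grad * mask
    return t


rng = np.random.default_rng(0)
n = 5
t_true = rng.normal(size=(n, 3))
t_true[0] = 0.0
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (0, 2), (1, 3), (2, 4), (0, 4)]
rel = np.array([t_true[j] - t_true[i] for i, j in edges])
t_est = synchronize_translations(rel, edges, n)
print(np.abs(t_est - t_true).max())  # on the order of floating-point error
```

With noise-free measurements on a connected graph, pinning camera 0 leaves a unique minimizer equal to the ground truth, so `t_est` should match `t_true`. With noisy measurements the result is only a least-squares fit.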

In this tutorial, we learned how to initialize a batch of SfM cameras, set up a loss function for bundle adjustment, and run an optimization loop.